- Author: Jinkun Geng, Li Dan

- Keywords: Distributed Machine Learning, Parameter Server, Ring Allreduce, Mesh-based Synchronization (MS)

1. What this paper is about:

Existing DML synchronization algorithms (Parameter Server (PS), Mesh-based (MS) and Ring-based (RS)) all work in a flat way and suffer when the network size is large.

In this work, we propose HiPS, a hierarchical parameter (gradient) synchronization framework.

We deploy HiPS on server-centric networks such as BCube and Torus, which better embrace RDMA/RoCE transport between machines. Mathematical analysis shows that it reduces the synchronization time by 73% and 75% on BCube and Torus, respectively.
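To make the hierarchical idea concrete, here is a minimal sketch. It assumes N = n^h servers addressed by h-digit base-n tuples (as in BCube) and uses scalar gradients. In stage s, the n servers whose addresses differ only in digit s form a group and exchange sums. After h stages, every server holds the global sum. The function name `hierarchical_allreduce` is hypothetical. The in-group step is a plain sum, not the paper's actual per-stage algorithm, so this illustrates only the grouping. It does not model the HiPS communication schedule or its timing.

```python
from itertools import product


def hierarchical_allreduce(grads, n, h):
    """Sum the gradients of N = n**h servers in h stages (illustrative only).

    Server i has the i-th h-digit base-n address. In stage s, servers whose
    addresses differ only in digit s form a group of n, and every member
    receives the group sum. After h stages each server holds the global sum.
    """
    N = n ** h
    assert len(grads) == N, "sketch assumes exactly n**h servers"
    addrs = list(product(range(n), repeat=h))  # addrs[i] = address of server i
    index = {a: i for i, a in enumerate(addrs)}
    vals = list(grads)
    for s in range(h):
        new_vals = [0] * N
        for i, a in enumerate(addrs):
            # Group of server i in stage s: vary digit s, keep the others fixed.
            group = [index[a[:s] + (d,) + a[s + 1:]] for d in range(n)]
            new_vals[i] = sum(vals[j] for j in group)
        vals = new_vals
    return vals


# Nine servers arranged as n = 3, h = 2: every server ends with 0 + 1 + ... + 8.
print(hierarchical_allreduce(list(range(9)), n=3, h=2))
```

Each stage involves only groups of n = N^(1/h) servers instead of all N. This is the intuition behind replacing one flat synchronization with h smaller ones.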

2. What I learned:

Data-center network Topology

Server-centric vs. switch-centric network topology: server-centric topologies are better suited to RDMA and help avoid PFC (Priority-based Flow Control) problems.

Server-centric network topologies include:

- BCube (more commonly used)

- Torus

- DCell

Switch-centric network topologies include:

- Clos (e.g., Fat-Tree)
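The two server-centric topologies above can be illustrated by how their servers are wired. The sketch below assumes the usual addressing. In BCube, servers carry base-n digit tuples, and a level-i switch connects the n servers that differ only in digit i (here, position `level` in the tuple). In a k-dimensional torus of side n, each server links directly to the servers one step away in each dimension, with wraparound. Both function names are hypothetical.

```python
def bcube_switch_members(addr, level, n):
    """Servers sharing the level-`level` switch with `addr` in BCube.

    Addresses are tuples of base-n digits; a level-i switch connects the n
    servers whose addresses differ only at tuple position i.
    """
    return [addr[:level] + (d,) + addr[level + 1:] for d in range(n)]


def torus_neighbors(addr, n):
    """Direct server-to-server neighbors in a k-dimensional torus of side n."""
    neighbors = set()  # a set removes duplicates when n == 2 (+1 and -1 coincide)
    for dim in range(len(addr)):
        for step in (-1, 1):
            nb = list(addr)
            nb[dim] = (nb[dim] + step) % n  # wrap around at the edges
            neighbors.add(tuple(nb))
    return sorted(neighbors)


print(bcube_switch_members((1, 2), level=0, n=4))  # servers on one level-0 switch
print(torus_neighbors((0, 0), n=4))                # four neighbors in a 4x4 torus
```

In BCube, servers reach each other through one switch per level. In a torus, they connect directly, and forwarding is done by servers rather than switches.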

State-of-the-art synchronization algorithms:

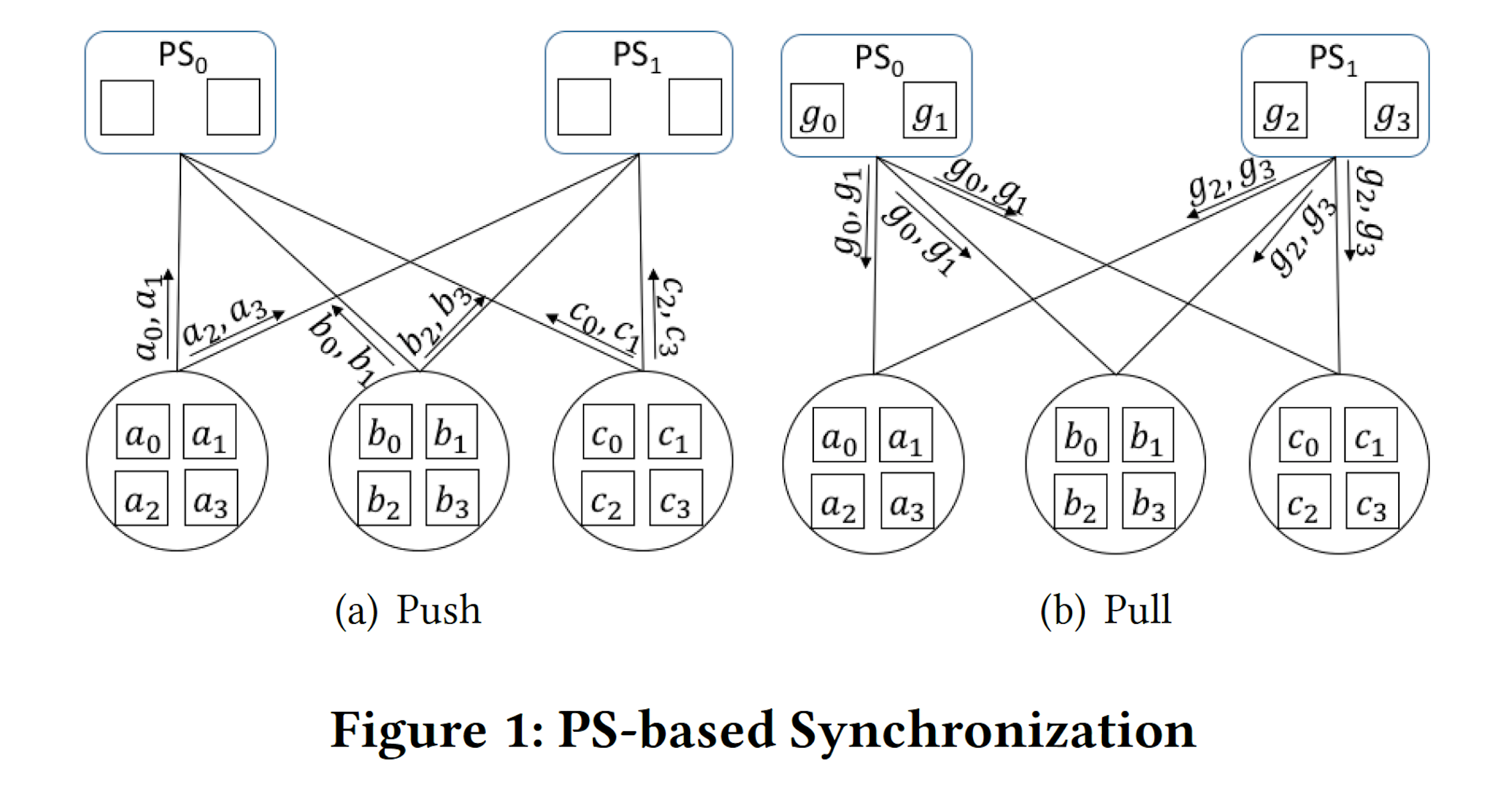

Parameter Server (PS)

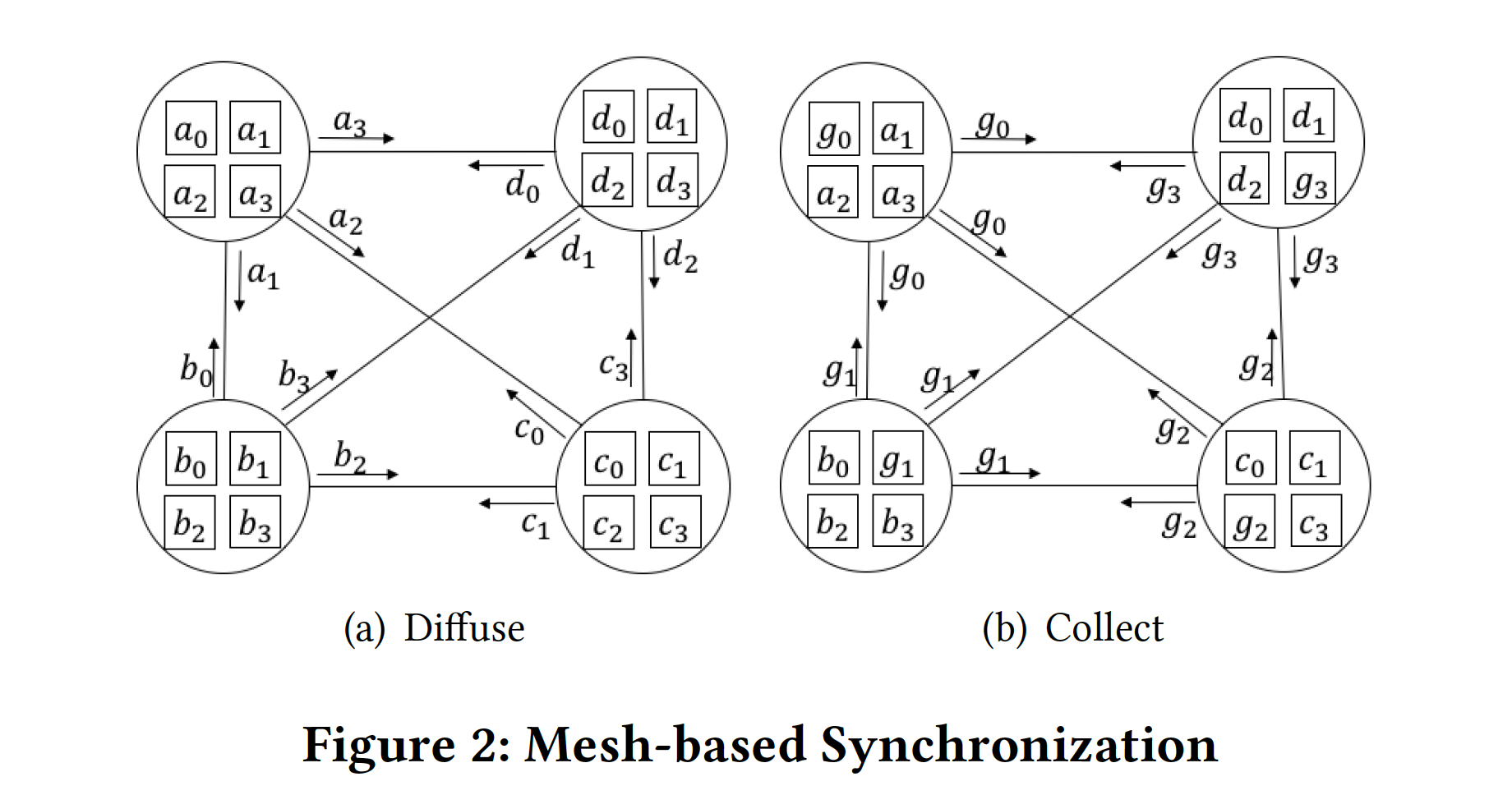

Push and Pull.

Mesh-based (MS)

Diffuse and Collect.

Mesh-based synchronization is a special case of the parameter server in which the number of workers equals the number of servers.
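Here is a minimal sketch of Diffuse and Collect. It assumes each of N nodes acts as both worker and server, each gradient vector splits evenly into N shards, and node j is the server for shard j. Under these assumptions, diffuse plays the role of the PS push and collect the role of the PS pull. This is why MS can be viewed as PS with as many servers as workers. The function `mesh_sync` is hypothetical and simulates all nodes in one process.

```python
def mesh_sync(grads):
    """Simulate mesh-based synchronization on N co-located worker/server nodes.

    grads[i] is node i's gradient vector. Assumption: its length is a
    multiple of N, and node j owns (acts as the server for) shard j.
    """
    N = len(grads)
    size = len(grads[0])
    assert size % N == 0, "sketch assumes the vector splits evenly into N shards"
    shard = size // N
    # Diffuse (PS push): every node sends shard j to node j, which sums it.
    aggregated = []
    for j in range(N):
        lo, hi = j * shard, (j + 1) * shard
        aggregated.append([sum(g[k] for g in grads) for k in range(lo, hi)])
    # Collect (PS pull): every node fetches all aggregated shards.
    full = [x for part in aggregated for x in part]
    return [list(full) for _ in range(N)]


print(mesh_sync([[1, 2], [3, 4]]))  # both nodes end with the element-wise sum
```

Because every node owns exactly one shard, the aggregation work is spread evenly across nodes, which matches the load-balance point below.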

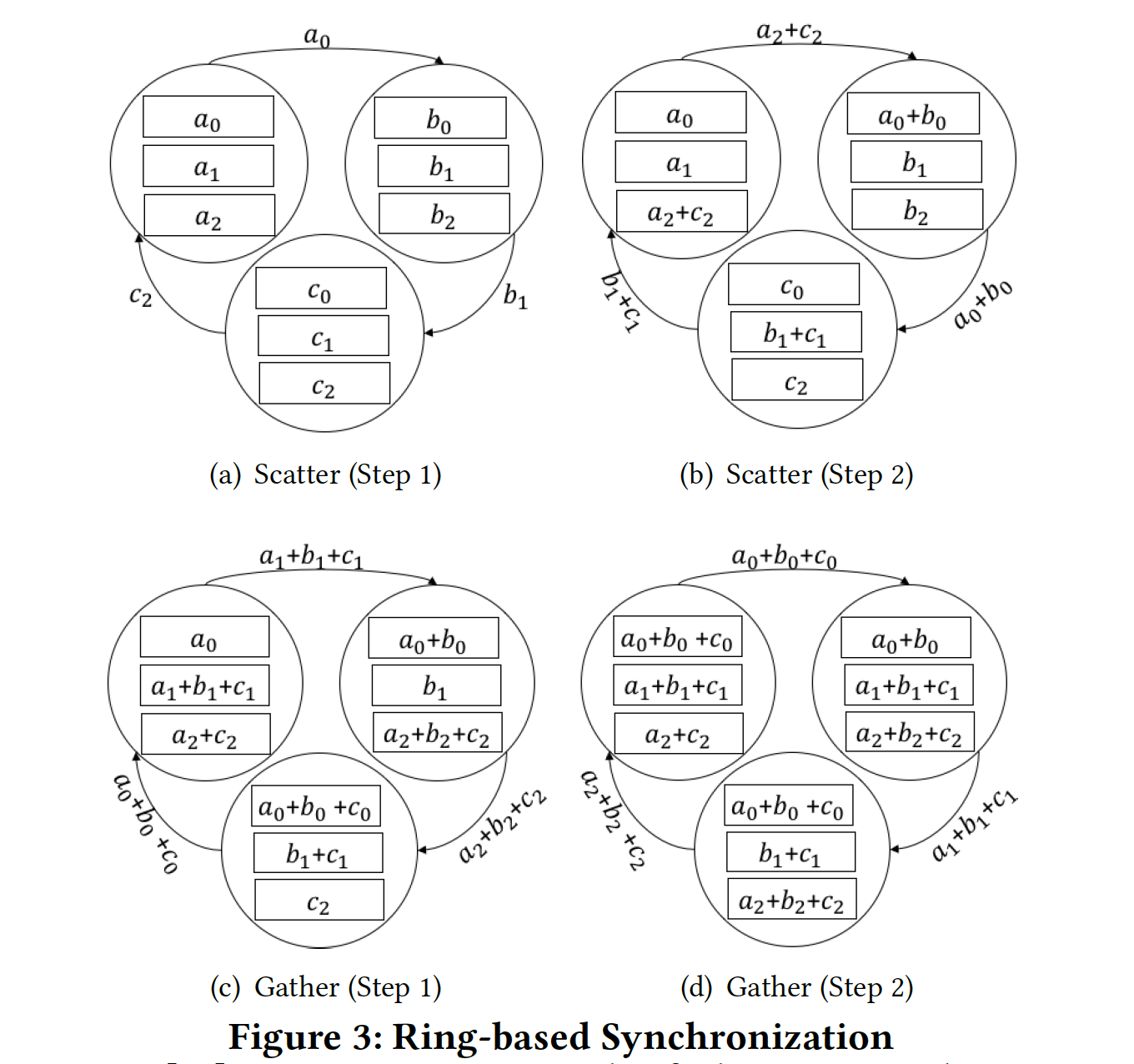

It also achieves better load balance among servers.

Ring-based (RS)

- the total number of servers: $N$

- the number of hierarchical stages in HiPS: $h$

- the number of servers in each group: $n = N^{1/h}$

- the total size of gradient parameters on each server: $W$

- the bandwidth capacity of each NIC: $B$

=> The global synchronization time (GST) of RS is then $\mathrm{GST}_{\mathrm{RS}} = \frac{2(N-1)W}{NB}$.
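The RS formula follows from ring allreduce. It has a reduce-scatter phase and an all-gather phase, each with N-1 steps. In every step, each server sends one W/N chunk to its ring successor, so each NIC carries 2(N-1)W/N in total. The sketch below is a single-process simulation with hypothetical function names, with W measured in elements. It checks that count and computes the corresponding GST.

```python
def ring_gst(N, W, B):
    """GST of ring-based sync: 2(N-1) steps, each sending a W/N chunk at rate B."""
    return 2 * (N - 1) * W / (N * B)


def ring_allreduce(grads):
    """Simulate ring allreduce; returns (final vectors, elements sent per node).

    Assumption: every vector has the same length, a multiple of N.
    """
    N = len(grads)
    data = [list(g) for g in grads]
    c = len(data[0]) // N  # chunk size W / N

    def chunk(k):
        return range(k * c, (k + 1) * c)

    sent = [0] * N
    # Reduce-scatter: in step s, node i sends chunk (i - s) to node i + 1,
    # which adds it to its own copy. Messages are built first (simultaneous send).
    for step in range(N - 1):
        msgs = [(i, (i + 1) % N, (i - step) % N) for i in range(N)]
        payloads = [[data[src][x] for x in chunk(k)] for src, _, k in msgs]
        for (src, dst, k), payload in zip(msgs, payloads):
            for off, x in enumerate(chunk(k)):
                data[dst][x] += payload[off]
            sent[src] += c
    # Now node i holds the fully reduced chunk (i + 1) % N.
    # All-gather: in step s, node i forwards chunk (i + 1 - s) to node i + 1.
    for step in range(N - 1):
        msgs = [(i, (i + 1) % N, (i + 1 - step) % N) for i in range(N)]
        payloads = [[data[src][x] for x in chunk(k)] for src, _, k in msgs]
        for (src, dst, k), payload in zip(msgs, payloads):
            for off, x in enumerate(chunk(k)):
                data[dst][x] = payload[off]
            sent[src] += c
    return data, sent


result, sent = ring_allreduce([[1, 2, 3], [10, 20, 30], [100, 200, 300]])
print(result, sent, ring_gst(3, 3, 1))
```

With three servers and three-element gradients, every node ends with `[111, 222, 333]` and sends 4 elements. This matches `ring_gst(3, 3, 1) == 4.0` when B is one element per time unit.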

Synchronous parallel models:

Because the computation performance and communication latency of servers (computing nodes) vary in large-scale DML, the choice of synchronization model matters. Distributed machine learning uses four synchronization mechanisms:

- BSP (Bulk Synchronous Parallel)

- Async (Asynchronous Parallel): fully asynchronous

- SSP (Stale Synchronous Parallel): delayed synchronous parallel

- ASP (Approximate Synchronous Parallel)
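A single staleness bound captures the difference between BSP, SSP and Async. This sketch assumes one common formulation: each worker's clock counts its completed iterations, and a worker may start its next iteration only if it is at most `staleness` clocks ahead of the slowest worker. A bound of 0 gives BSP, a finite s gives SSP, and infinity gives Async. ASP is not modeled here. The function `can_proceed` is hypothetical.

```python
import math


def can_proceed(worker_clock, all_clocks, staleness):
    """Return True if a worker may start its next iteration.

    staleness = 0        -> BSP: wait until every worker reaches the same clock
    staleness = s > 0    -> SSP: run ahead of the slowest worker by at most s
    staleness = math.inf -> Async: never wait
    """
    return worker_clock - min(all_clocks) <= staleness


clocks = [5, 3, 4]
print(can_proceed(5, clocks, 0))         # BSP: must wait for the slowest worker
print(can_proceed(5, clocks, 2))         # SSP with s = 2: allowed to continue
print(can_proceed(5, clocks, math.inf))  # Async: always continues
```

A larger bound lets fast workers avoid waiting for stragglers, at the cost of computing on staler parameters.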